It’s a New Year, but the same topic is dominating the technology industry — Generative AI.

A recent article in The Economic Times highlights IDC’s forecast that enterprises globally will invest $143 billion in Generative AI (GenAI) by 2027, with a compound annual growth rate (CAGR) of 73.3%. This spending includes GenAI software as well as related infrastructure hardware and IT/business services.

Some people are still struggling to get their arms around the basic premise: What is GenAI? Here's a simple, straightforward answer: GenAI is an AI capability that "generates" new content based on the data fed to underlying machine learning (ML) models. The technology can summarize text, expand text, analyze the sentiment of a piece of text, extract information from a dataset, translate between languages, generate images from text, and handle a host of other tasks.

The next question: Why GenAI? Or more precisely, why is GenAI creating such industry buzz? Largely because of its enormous potential impact on business and technology operations. GenAI outputs are already in use in many scenarios, while other use cases are still in development and need further fine-tuning and alignment before they become productive and profitable.

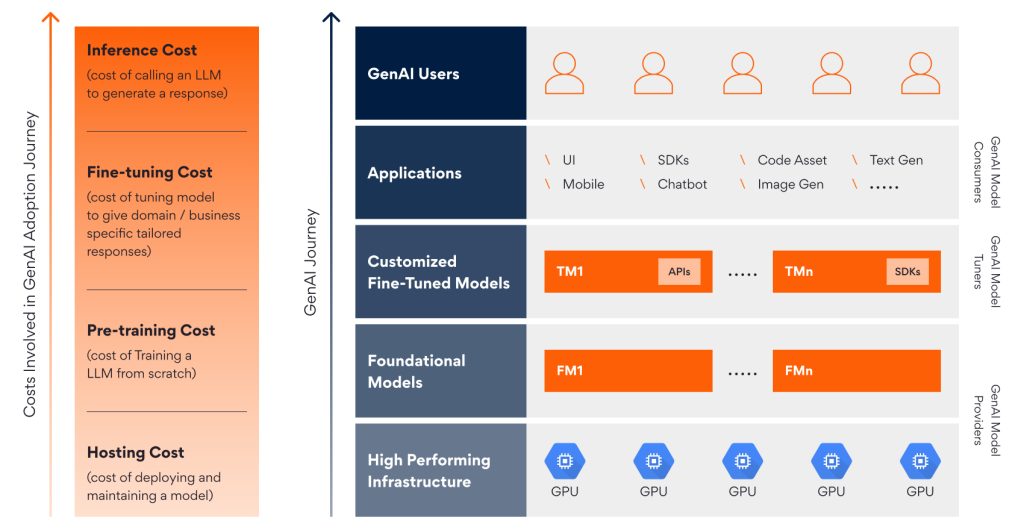

Now comes perhaps the most critical question for any enterprise looking to capitalize on this trend, and one that will drive a large share of enterprise investment going forward: How GenAI? For one potential answer to this question, let's start with the diagram above.

As this diagram shows, any organization's GenAI journey involves three main motions:

- Model Providers: Model Providers build base large language models (LLMs), sometimes known as foundation models (FMs), which are pre-trained on massive datasets and can be further tuned for custom needs. Building FMs from scratch takes enormous amounts of time and money, because these models are trained on billions of data points. Generally, large organizations with sufficient budgets try to build FMs on their own and provision high-performance servers to build and train these models. Some of the better-known FMs include Google's BERT, OpenAI's GPT, and Amazon Titan. Within an organization, this motion incurs infrastructure hosting costs as well as the computational costs of building and training these LLMs.

- Model Tuners: Model Tuners take FMs suited to their use case as base models and extend, customize, and fine-tune them with custom data points mapped to organizational or domain-specific needs. These customized models are then deployed in the organization's environments and integrated with its other applications to fulfill business requirements and provide more accurate and relevant insights. This motion incurs costs for building datasets, fine-tuning the FMs, and hosting the customized FMs in the organization's environments.

- Model Consumers: This group either uses purpose-specific FMs that the publisher has already fine-tuned for a use case or consumes other fine-tuned models, integrating them into solutions through API calls or published SDKs (or simply testing the models in web-based playgrounds). ChatGPT's web interface is an example of a Model Consumer application. This motion mostly involves paying for model usage, generally based on the number of input and output tokens in each GenAI API call, through SaaS offerings from vendors and providers.
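Because Model Consumers typically pay per token, a rough budget can be estimated before committing to a use case. The minimal Python sketch below assumes a simple pricing model with separate per-1,000-token rates for input and output tokens (some vendors quote per million tokens instead) and a steady daily call volume. The `TokenPricing` class, the helper functions, and all prices are hypothetical placeholders, not any vendor's actual API or price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical per-1,000-token prices in USD; real rates vary by vendor and model."""
    input_per_1k: float
    output_per_1k: float


def estimate_call_cost(input_tokens: int, output_tokens: int, pricing: TokenPricing) -> float:
    """Cost of one API call: input and output tokens are billed at separate rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens / 1000) * pricing.input_per_1k + (output_tokens / 1000) * pricing.output_per_1k


def estimate_monthly_cost(calls_per_day: int, avg_input_tokens: int, avg_output_tokens: int,
                          pricing: TokenPricing, days: int = 30) -> float:
    """Scale the per-call cost by an assumed, steady daily call volume."""
    per_call = estimate_call_cost(avg_input_tokens, avg_output_tokens, pricing)
    return per_call * calls_per_day * days


if __name__ == "__main__":
    # Illustrative numbers only, not any vendor's actual prices.
    pricing = TokenPricing(input_per_1k=0.0005, output_per_1k=0.0015)
    per_call = estimate_call_cost(800, 200, pricing)
    monthly = estimate_monthly_cost(10_000, 800, 200, pricing)
    print(f"per call: ${per_call:.4f}")
    print(f"per month: ${monthly:,.2f}")
```

With these placeholder rates, a call with 800 input and 200 output tokens costs about $0.0007, or roughly $210 a month at 10,000 calls per day. Keeping input and output rates separate matters because output tokens are often priced higher than input tokens, so verbose responses can dominate the bill.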

An organization may start its GenAI journey with any of the above motions, depending on business needs, budgets, time-to-market targets, and so on. The very first step on this journey is an in-depth analysis of key use cases and of which FMs best suit their requirements. An unclear use case with no specific expected outcomes, an unsuitable FM, or a poorly trained model that hallucinates can result in financial disaster, with long-term damage to an organization's reputation and operational efficiency.

Persistent Systems is a trusted strategic partner for companies still grappling with the "What, Why, and How" of GenAI. We are dedicated to collaborating with clients throughout their GenAI adoption journeys through an established Center of Excellence staffed by hundreds of certified professionals. We are also deploying clients' GenAI use cases across vertical industries such as banking, insurance, and healthcare and life sciences, leveraging a variety of in-house accelerators and the strengths of our hyperscaler partnerships for faster use case conversion.

Ready to learn “How” to move forward? Visit our GenAI site for more details on our expertise and offerings.

Author’s Profile

Pooja Arora

Senior Payments Architect