Independent software vendors are looking for every opportunity to accelerate their digital transformation and get more done quickly for less cost, and three trends—hybrid cloud, containerization, and microservices—are delivering the desired results, even in the current pandemic.

At the same time, enterprise organizations worldwide—feeling increasing pressure to accelerate their own digital transformations—are focusing their own IT budgets on software partners able to help them speed their own journeys.

“By 2023, more than 70% of global organizations will be running more than two containerized applications in production,” according to a 2019 Gartner report [subscription required], “up from less than 20% in 2019.”

Containerization allows developers to create and deploy applications faster and more securely by bundling the application code together with all the configuration files, libraries, and dependencies necessary for it to run.

The resulting container delivers faster time to market for new applications, with the added benefit of greater resource utilization and flexibility across increasingly common hybrid- or multi-cloud infrastructures—the exact conditions needed to accelerate innovation and thrive in the current pandemic environment.

But containerizing your application isn’t a destination – it’s simply the first step in the journey towards full application modernization and digital transformation. So, what happens when it’s time to move beyond version X to version X.1?

New architectures require new extension approaches

Consider an independent software vendor (ISV) looking to harden its product by installing third-party security software on top of it. The installation would help the ISV maintain regulatory and security compliance across all its deployed products, both on-premises and containerized.

The installation process has to be compatible with the workload deployment, upgrade, and failover protocols for containerization. These differ greatly from on-premises routines, which follow deployment and configuration patterns designed to stand up standalone, clustered, or sandbox environments ready for customer use.

Before the advent of containerization, these processes were crafted for two hosting options: bare-metal or virtual server-based deployment. So naturally, the automation tools, scripts, and operational procedures followed suit.

These procedures not only involve the deployment of the product but also customizations and integrations with third-party applications, which can sometimes enable features that require configuration changes.

Using pre-created images for entire virtual servers and applying configurations on them may work well for monolithic on-premises deployments, but the elasticity and lightweight nature of containers require a new set of processes and best practices that take into account:

- Individual containers for microservices

- Restricting privilege and shell access

- Image vulnerability scanning, signing and more

Just as importantly, the packaging or image build and preferred deployment model need to adhere to existing enterprise security guidelines, which can evolve rapidly.
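To illustrate the privilege restrictions listed above, a deployment pipeline could inspect each container spec before rollout. The following is a minimal Python sketch under stated assumptions, not part of the framework described in this article: the function name `privilege_findings` is hypothetical, and the container spec is modeled as a plain dictionary that uses the Kubernetes `securityContext` fields `privileged`, `allowPrivilegeEscalation`, and `runAsNonRoot`. Treating an unset field as a finding is a conservative choice made for this sketch.

```python
from typing import Any


def privilege_findings(container: dict[str, Any]) -> list[str]:
    """Return human-readable privilege findings for one container spec.

    The spec is a plain dict shaped like a Kubernetes container entry.
    Unset fields are treated conservatively (assumption of this sketch).
    """
    ctx = container.get("securityContext", {})
    findings = []
    if ctx.get("privileged", False):
        findings.append("runs in privileged mode")
    if ctx.get("allowPrivilegeEscalation", True):
        findings.append("allows privilege escalation")
    if not ctx.get("runAsNonRoot", False):
        findings.append("may run as root")
    return findings


# Hypothetical specs used only for illustration.
hardened = {
    "name": "product",
    "securityContext": {
        "privileged": False,
        "allowPrivilegeEscalation": False,
        "runAsNonRoot": True,
    },
}
legacy = {"name": "product-legacy"}

for spec in (hardened, legacy):
    print(spec["name"], privilege_findings(spec) or "no findings")
```

A check like this could run alongside image scanning and signing, so that an extension requesting elevated access is flagged before it reaches the cluster rather than after.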

Deploying to maximize the benefits of containerization and Red Hat OpenShift

Deployment of containerized workloads delivers some unique advantages over on-premises deployment, including more responsive scaling, auto-healing, and maintenance of state information such as logs, configurations, upgrades, rollbacks, and more.

IBM’s Red Hat OpenShift containerization platform offers some additional deployment flexibility on top of those standard benefits, including auto-scaling, dynamic placement, and an auto-healing function that can choose the node best suited to run the pods in the deployment.

But if the operations team must update individual containers with configuration and binaries after each upgrade or extension, development efficiency and response times can suffer badly once deployments scale beyond 10-20 containers.

Some upgrades can also request root or administrative privileges that are not inherently available with certified images, forcing operations teams to enable access individually for each exception, which is another opportunity to erode key performance metrics.

The development teams at Persistent Systems have been modernizing and deploying legacy IBM applications on the cloud for the last several years, and we’ve developed a customization and extension framework that can help software vendors use Red Hat OpenShift to maximize the benefits of containerization and modernization without the same scalability challenges other containerization platforms can present.

The Persistent Systems Framework

Since the framework was built around IBM products, Persistent developers took advantage of IBM Cloud Paks, containerized versions of IBM products deployed on top of the Red Hat OpenShift platform.

IBM Cloud Paks can help organizations of all sizes accelerate their digital transformation and deploy applications quickly to transform their business and build new capabilities, but they still need a model to deploy customizations and extensions in a manner that is:

- Non-disruptive

- Consistent with best practices for containerized deployment

- Similar to the current customer experience

Here is one example of how the framework can address the extension and customization of a containerized product.

1. Containerizing the product

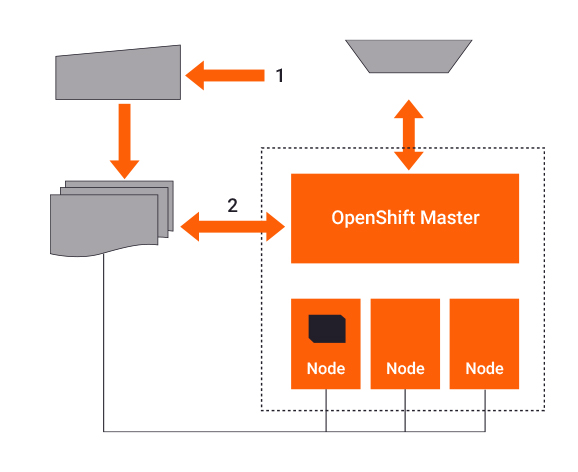

The customer begins by (1) deploying Version X of the product on the OpenShift Cluster, which is (2) verified by checking the registry to ensure the image is present. (3) The deployment pulls the image from the registry on the node selected for deployment.

At this point, (3) Version X of the image is operational, and (4) OpenShift now manages the lifecycle of the product.

2. Updating the product image

The customer creates Version X.1 of their product, and (1) pushes it to the registry.

(2) The process is non-disruptive to the current deployment, and an additional image becomes available for customer updates.
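The first two steps can be sketched as a small simulation. This is an illustrative Python model only: `Registry` and `deploy` are hypothetical names invented for the sketch, the image tags `product:X` and `product:X.1` are placeholders, and nothing here calls a real registry or OpenShift API. It shows why pushing Version X.1 does not disturb the running deployment: the deployment keeps referencing the tag it was created with.

```python
class Registry:
    """Hypothetical in-memory stand-in for an image registry."""

    def __init__(self):
        self._tags = set()

    def push(self, image: str) -> None:
        self._tags.add(image)

    def has(self, image: str) -> bool:
        return image in self._tags


def deploy(registry: Registry, image: str, replicas: int) -> dict:
    """Create a deployment record, refusing images the registry lacks."""
    if not registry.has(image):
        raise LookupError(f"{image} not found in registry")
    return {"image": image, "pods": [image] * replicas}


registry = Registry()
registry.push("product:X")                      # Version X is in the registry
deployment = deploy(registry, "product:X", 3)   # step 1: deploy Version X

registry.push("product:X.1")                    # step 2: push Version X.1

# The running pods are untouched; both images are now available.
print(deployment["pods"])
print(registry.has("product:X"), registry.has("product:X.1"))
```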

3. Deploying the upgrade

When the customer updates the image, (1) the product upgrade process is triggered. (2) The request checks that image X.1 is available in the image registry, and (3) the image is then updated for all product pods according to a deployment strategy defined by the customer, such as rolling upgrades.

(4) Once the upgrade is successful, the pods are operational with the updated image and config files, and (5) OpenShift is ready to manage the product.
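The upgrade flow above can be approximated with a short simulation. This is a sketch under assumptions, not the framework's implementation: `rolling_update` is a hypothetical function, and its `max_unavailable` parameter is only loosely modeled on the idea of limiting how many pods are replaced at once. It checks that the new image is available, then replaces pods batch by batch so that some pods keep serving the old version until the rollout completes.

```python
def rolling_update(pods, new_image, available_images, max_unavailable=1):
    """Replace pod images in batches; return the pod list after each batch."""
    if new_image not in available_images:
        raise LookupError(f"{new_image} not found in registry")
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")

    pods = list(pods)  # work on a copy so the caller's list is unchanged
    history = []
    for start in range(0, len(pods), max_unavailable):
        stop = min(start + max_unavailable, len(pods))
        for i in range(start, stop):
            pods[i] = new_image
        history.append(list(pods))  # snapshot after this batch
    return history


images = {"product:X", "product:X.1"}
for snapshot in rolling_update(["product:X"] * 3, "product:X.1", images):
    print(snapshot)
```

Each printed snapshot mixes old and new pods until the final batch, which is the property that keeps a rolling upgrade from taking the whole product offline.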

4. Failure scenario

Since the framework will not only update the product image but also the deployment to use the updated image, (1) any failover scenarios will always get the updated image.
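The reasoning behind this can be made concrete with a toy model. The `Deployment` class below is hypothetical and greatly simplified: it assumes that a replacement pod is always created from the image recorded on the deployment, and it collapses the rollout into a single step. It contrasts patching a running pod in place with updating the deployment itself.

```python
class Deployment:
    """Toy model: replacement pods are created from the deployment's image."""

    def __init__(self, image: str, replicas: int):
        self.image = image
        self.pods = [image] * replicas

    def set_image(self, image: str) -> None:
        """Update the deployment's image and roll every pod to it."""
        self.image = image
        self.pods = [image] * len(self.pods)  # rollout collapsed to one step

    def replace_failed_pod(self, index: int) -> None:
        """Simulate failover: the new pod starts from the deployment's image."""
        self.pods[index] = self.image


d = Deployment("product:X", replicas=2)

# Patching a running pod in place does not survive a failover.
d.pods[0] = "product:X.1"
d.replace_failed_pod(0)
print(d.pods[0])  # back to the deployment's image, product:X

# Updating the deployment itself does survive a failover.
d.set_image("product:X.1")
d.replace_failed_pod(1)
print(d.pods)
```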

When the product is updated with the latest releases, the framework can be used to create updated images and apply the rolling update to the existing deployment.

It’s important to note the steps outlined here are specific to this scenario, and Persistent’s customization and extension framework itself is highly adaptable to any software vendor’s deployment needs.

This framework can also be run independently at customer premises and can be extended to take advantage of OpenShift’s native continuous integration and continuous deployment (CI/CD) capabilities.

With additional input from the customer, it is also completely extensible, allowing the creation of additional customer Docker images as needed instead of simply updating customer products.

Accelerate your product deployments, extensions, and customizations with Persistent Systems

Persistent Systems has more than 2,000 containerization and Kubernetes experts on staff around the globe, ready to help your organization leverage Red Hat OpenShift to deploy better, more innovative software faster than your competitors.

Interested in learning more about Cloud Pak deployment with Persistent Systems?