In our previous blog post, we discussed creating adaptive, interactive and immersive Extended Reality (XR) experiences by leveraging Generative AI models.

One of the most important aspects of content development for XR experiences, and one of its key challenges, is creating realistic and immersive environments and 3D assets.

This is where Neural Radiance Fields (NeRFs) help create high-quality and high-fidelity XR environments.

Understanding NeRFs

A Neural Radiance Field, or NeRF, is a technique that generates highly detailed and realistic 3D representations of objects and environments from a set of 2D images or videos. By employing neural networks, NeRFs can capture intricate details and realistic lighting effects, resulting in visually compelling and immersive XR experiences.

How does NeRF work?

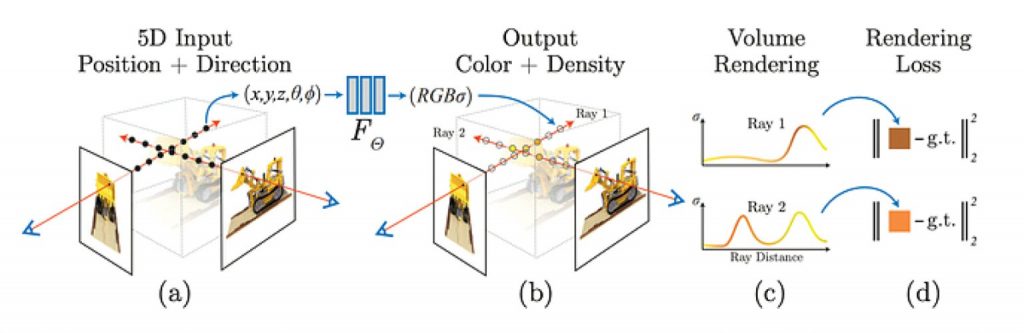

As seen in Fig. 1, the neural network takes a 5D coordinate as input, made up of a spatial location (x, y, z) and a viewing direction (θ, ϕ). Its output is the volume density and the view-dependent emitted radiance at that spatial location.
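To make the input and output of such a network concrete, here is a minimal sketch in Python with NumPy. It is not a trained model: the layer sizes and the names `toy_radiance_field`, `W_pos`, `w_sigma`, `W_dir` and `W_rgb` are hypothetical, and the weights are random. It only illustrates the mapping from a 5D coordinate to a density and an RGB colour, with the density depending on position alone and the colour depending on both position and viewing direction.

```python
import numpy as np

rng = np.random.default_rng(0)

# Untrained random weights with hypothetical sizes, for illustration only.
HIDDEN = 16
W_pos = rng.normal(size=(3, HIDDEN))
w_sigma = rng.normal(size=HIDDEN)
W_dir = rng.normal(size=(3, HIDDEN))
W_rgb = rng.normal(size=(HIDDEN, 3))


def direction_from_angles(theta, phi):
    """Convert viewing angles (theta, phi) into a 3D unit vector."""
    return np.array([
        np.sin(theta) * np.cos(phi),
        np.sin(theta) * np.sin(phi),
        np.cos(theta),
    ])


def toy_radiance_field(x, y, z, theta, phi):
    """Map a 5D coordinate to (density, rgb)."""
    position = np.array([x, y, z])
    features = np.maximum(position @ W_pos, 0.0)       # ReLU features from position only
    sigma = np.logaddexp(0.0, features @ w_sigma)      # softplus keeps density >= 0
    view = direction_from_angles(theta, phi)
    hidden = np.maximum(features + view @ W_dir, 0.0)  # mix in the viewing direction
    rgb = 1.0 / (1.0 + np.exp(-(hidden @ W_rgb)))      # sigmoid keeps colour in [0, 1]
    return sigma, rgb
```

Querying the same point from two different viewing directions returns the same density, while the colour is free to change with the view.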

Views are synthesized by querying 5D coordinates along camera rays, and a classic volume rendering technique is used to project the output colors and densities into an image.
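For readers who want the formula behind this step, the volume rendering integral used in the original NeRF work can be written as follows. The symbols are introduced here and do not appear elsewhere in this post: a camera ray is r(t) = o + t d with origin o and direction d, σ is the density, c is the emitted colour, and t_n and t_f are the near and far bounds of the ray.

$$
C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt,
\qquad
T(t) = \exp\!\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right)
$$

In practice the integral is approximated with N samples along the ray, where δ_i = t_{i+1} − t_i is the spacing between neighbouring samples:

$$
\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - e^{-\sigma_i \delta_i}\right) \mathbf{c}_i,
\qquad
T_i = \exp\!\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right)
$$

Here T_i is the fraction of light that survives up to sample i, so dense samples near the camera hide whatever lies behind them.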

Additionally, a rendering loss is calculated and minimized to ensure that the rendered images closely match the input images from different viewpoints.

Fig. 1 source: https://www.matthewtancik.com/nerf
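As a rough illustration of what such a loss looks like, the sketch below computes the mean squared colour error over a batch of rays. The function name `rendering_loss` and the array layout (one RGB triple per ray) are assumptions made for this example, not the API of any particular NeRF package.

```python
import numpy as np


def rendering_loss(rendered_rgb, target_rgb):
    """Mean over rays of the squared colour error between rendered and input pixels."""
    rendered_rgb = np.asarray(rendered_rgb, dtype=float)
    target_rgb = np.asarray(target_rgb, dtype=float)
    per_ray = np.sum((rendered_rgb - target_rgb) ** 2, axis=-1)  # squared L2 error per ray
    return float(np.mean(per_ray))
```

A rendered pixel that matches its input pixel exactly contributes zero, so driving this value down pulls the rendered views toward the captured images.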

The different stages involved in generating results from NeRF-based models are as follows:

- Data collection: NeRF models require training data consisting of images or videos, along with the corresponding camera poses, captured from different viewpoints around an object or a scene. Typically, a controlled camera setup with a stationary camera capturing videos/images from different angles is used to collect this kind of data.

- Image pre-processing: Software packages such as Luma AI, Instant-NGP and Nerfstudio each have their own extraction pipeline and take different approaches to this stage. We can also use COLMAP, an end-to-end image-based 3D reconstruction pipeline, with the processing mode set to ‘sequential’ to determine camera poses. In our experience, Luma AI’s own camera pose estimation pipeline was the easiest to use for pre-processing.

- Training and optimization: NeRFs are trained using a combination of supervised learning and rendering loss. Each of the above platforms has a different training process. The neural network parameters are optimized by minimizing the difference between the pixel colors rendered from the predicted density and radiance values and the ground-truth colors in the training images. This stage is computationally intensive. In the runs we carried out, Luma AI took less time to train a NeRF than the other tools we tried.

- Rendering: A ray marching technique is employed to render a 3D scene using NeRFs. For each pixel in the image, a ray is cast from the camera position through the pixel and into the scene. The ray is then marched through the volumetric representation of the scene, sampling points along the way. At each sample point, the neural network is queried to estimate the density and radiance. The radiance values along the ray are integrated, weighted by density, to compute the final color value for that pixel. This results in a photorealistic rendering of the scene. Luma AI offers pre-made orbit and oscillation paths, and we found it easier to preview the camera path for our inputs in its editor than in the other editors we tried.
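The rendering stage above can be sketched in a few lines of Python with NumPy. This is a toy under stated assumptions, not the pipeline of Luma AI, Instant-NGP or Nerfstudio: `toy_field` is a hypothetical stand-in for a trained network (a dense red sphere of radius 1 at the origin), the camera is a simple pinhole placed at z = -3, and the sample count and ray bounds are arbitrary.

```python
import numpy as np

SPHERE_COLOR = np.array([1.0, 0.0, 0.0])


def toy_field(points):
    """Hypothetical stand-in for a trained NeRF: a dense red sphere of radius 1."""
    inside = np.linalg.norm(points, axis=-1) < 1.0
    sigma = np.where(inside, 20.0, 0.0)                # density per sample point
    rgb = np.broadcast_to(SPHERE_COLOR, points.shape)  # colour per sample point
    return sigma, rgb


def render_ray(origin, direction, near=0.0, far=6.0, n_samples=128):
    """March one ray through the field and composite a pixel colour."""
    direction = direction / np.linalg.norm(direction)
    edges = np.linspace(near, far, n_samples + 1)
    t = 0.5 * (edges[:-1] + edges[1:])                 # sample at segment midpoints
    delta = np.diff(edges)                             # length of each segment
    points = origin + t[:, None] * direction
    sigma, rgb = toy_field(points)
    alpha = 1.0 - np.exp(-sigma * delta)               # opacity of each segment
    # Fraction of light that reaches each sample without being absorbed earlier.
    transmittance = np.cumprod(np.concatenate(([1.0], 1.0 - alpha[:-1])))
    weights = transmittance * alpha
    return (weights[:, None] * rgb).sum(axis=0)


def render_image(size=9):
    """Cast one ray per pixel from a pinhole camera at z = -3 looking along +z."""
    origin = np.array([0.0, 0.0, -3.0])
    coords = np.linspace(-0.5, 0.5, size)
    image = np.zeros((size, size, 3))
    for row, v in enumerate(coords):
        for col, u in enumerate(coords):
            image[row, col] = render_ray(origin, np.array([u, v, 1.0]))
    return image
```

With these settings, the centre pixel of `render_image()` comes out close to pure red because its ray passes through the sphere, while the corner pixels stay black because their rays miss it. A real NeRF replaces `toy_field` with the trained network and usually samples more densely where the scene has content.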

At Persistent, we have utilized the power of NeRF models for creating different assets for XR experiences and have seen the benefits of leveraging these models.

One such example is the NeRF-based creation of a 3D model from a real-world object, a chair.

NeRF Applications in XR

- Augmented Reality (AR): With NeRFs, AR applications can blend realistic-looking, high-quality 3D objects into the real-world environment while accounting for lighting conditions and occlusions. This opens up possibilities for interactive experiences, product visualization and design.

- Mixed Reality (MR): NeRFs play a crucial role in merging virtual and physical objects in MR experiences. They enable realistic 3D object interactions with dynamic lighting and shadows, providing users with an immersive experience.

- Virtual Reality (VR) and fully immersive environments: NeRFs enable the creation of high-quality, high-fidelity, detailed and interactive 3D environments, which enhance the realism and immersion of these experiences. Users can teleport around and explore 3D environments populated with lifelike objects that have the same textures and materials as real ones, and interact with them in real time.

- Digital twins: Digital representations of real-world spaces enable users to experience them as if they were physically present in the same space. NeRFs enable the creation of such realistic-looking, high-quality 3D spaces, empowering businesses to showcase their services and products digitally as they would in physical spaces.

Benefits of using NeRF-based models in XR experience building

- High quality, high fidelity and enhanced realism: NeRFs capture fine detail and view-dependent lighting, offering users a compelling, immersive experience that closely resembles the physical world. This heightened realism can increase user engagement and satisfaction.

- Time and cost-efficient: NeRFs streamline the XR development process by reducing the need for labor-intensive asset creation and optimization. This reduces development time and costs, making XR experiences more accessible and affordable.

- Improved interaction: NeRF-enabled XR experiences allow for more intuitive and realistic interactions with 3D objects and assets. Users can manipulate and interact with the objects placed in these experiences with a level of responsiveness and immersion that was previously difficult to achieve.

- Competitive advantage: Leveraging NeRF-based Generative AI models in building XR experiences provides a competitive advantage by enabling businesses to offer high-quality, high-fidelity and visually compelling experiences. This can improve customer engagement, loyalty, brand recognition and market differentiation.

Other techniques for creating 3D models of physical, real-world environments

Photogrammetry is another method of generating 3D models of landscapes and objects from photographs. The process takes multiple overlapping pictures from different perspectives and angles. By processing these pictures with specialized software, one can create a point cloud and a mesh representation of the object or landscape, resulting in a photorealistic 3D model. Photogrammetry may be better suited for capturing fine details in the form of meshes and textures.

NVIDIA recently announced Neuralangelo, a new AI model for 3D reconstruction using neural networks. This model can turn 2D video clips into highly detailed 3D structures. It builds on the technology behind Instant NeRF to capture fine details, and aims to deliver the best of NeRF (excellent for stunning visuals) and photogrammetry (excellent for surface details).

By learning the volumetric representation of a scene using neural networks, NeRFs can capture fine-grained, high-quality details and complex lighting effects. This enables NeRFs to produce highly realistic and immersive renderings of 3D models and scenes, making them a powerful tool for XR applications and beyond.

With expertise in XR technologies and Generative AI, Persistent provides consulting, design, development and support services to clients building AR, MR and VR solutions that deliver customized, intuitive and immersive experiences.

Contact us to explore more about our XR offerings.