The Ops movement is here to stay. Starting with DevOps about 12 years ago, the emphasis in automating build-test-deploy cycles through software has shown to bring clear benefits.

This blog gives an overview on the Ops movement, why it is important, and how DevOps has been extended to large segments of the software industry: to security (with DevSecOps), to analytics (with DataOps and ML/ModelOps), and to IT Operations (with AIOps).

We also briefly comment on the relationship between DevOps, AIOps and SRE (Site Reliability Engineering).

DevOps

Large software deployments from one environment to another generally used to be a clumsy, long, prone to failure, and poorly automated endeavours. The cloud changed all this, even though, for infrastructure as code to become a reality, it took many years to get the right abstractions (from the Unix shell to virtual servers on demand). DevOps was born around 2009, right after virtual servers on demand became available as cloud services and developers could write API-driven provisioning scripts to automate the build-test-deploy pipeline. The first Continuous Delivery text, now a book, lays out the basics:

- Comprehensive configuration management

- Short lived branches (in reference to trunk-based development) with continuous integration, and

- Continuous testing.

There is no official DevOps definition. SEI defines it as “a set of practices intended to reduce the time between committing a change to a system and the change being placed into production, while ensuring high quality”. Therefore, DevOps focuses on software delivery performance on the build-test-deploy cycles using the techniques described above, now referred to by the CI/CD acronym. DevOps and Agile address different software life cycles, so they complement each other. Most DevOps teams follow the Agile methodology for the design/dev cycles (start small, build incrementally in small batches, get early feedback).

Four metrics for delivery performance have been defined:

- Deployment Frequency

- Lead Time for Changes

- Mean-Time-To-Restore (MTTR)

- Change Failure Rate (CFR)

High DevOps performers are those that do well on these metrics e.g., multiple deployments per day, whereas low performers spend months on a single deployment. The State of DevOps reports since 2014 have shown that high DevOps performers are twice as likely to exceed organizational performance goals as low DevOps performers.

DevOps and SRE

DevOps is like SRE, an acronym that became familiar when the first book was published in 2016, in that it applies software engineering practices and tools to operations tasks. However, SRE expands DevOps’s MTTR and CFR availability metrics to a wider reliability concept that captures a service/site’s operational capabilities such as latency, performance, efficiency, change management, monitoring, emergency response, and capacity planning. The State of DevOps reports now includes reliability as an operational performance metric. The 2021 report finds that elite organizations, those prioritizing both delivery and operational excellence, also report the highest organizational performance. But there is room for growth: only 10% of elite respondents indicated that their teams have fully implemented all SRE practices being measured.

DevSecOps

Software has been found to be the weakest link when it comes to the security of the enterprise stack. Several factors contribute to this: many developers are ignorant of common security risks (such as these, highlighted by the OWASP Foundation); Infosec teams are often poorly staffed (1 Infosec per 10 IT Infrastructure per 100 Developers in typical large companies); and they are involved at the end of the delivery cycle, when it is painful and expensive to make the necessary changes to improve security.

DevSecOps extends DevOps by adding the Design and Development software cycles to DevOps’s Build, Test and Deploy cycles. It also adds one role: Infosec engineers, who help in designing and architecting applications. They make sure they provide easy-to-consume processes and libraries to reduce vulnerabilities, help with code reviews, and ensure that security features belong to the automated test suite.

Finally, there is a large and growing set of security (e.g., SCM, SIEM) tools that Infosec experts can help select and integrate with DevOps CI/CD processes (e.g., security scans to reduce vulnerabilities in containers).

DataOps

DataOps applies the same rigor of software engineering as DevOps to the development and deployment of software “data pipelines”, which govern the flow of data from its sources to consumption by analytics, either traditional BI reports/dashboards or advanced ML models. The purpose is to accelerate the delivery of data from the original concept/idea to the creation of charts, dashboards or models that create value while, at the same time, improving quality and lowering costs.

Importantly, quality applies not only to the software code within the pipelines, the databases, and the analytics code, but also to the quality of the data that passes through the pipelines in production. Indeed, the quality of the analytics insights and/or predictions depend on the quality of the data that passes through the pipelines. Therefore, DataOps takes care of an extra-cycle of the SLDC: monitoring after deployment. Specifically,

- It orchestrates data pipelines built with different off-the-shelf tools, and

- Control the quality of data at all stages of the pipeline.

DataOps, given its stated purpose, also covers the design and development cycles. The set of roles, beyond DevOps’s, covers business analysts, data stewards, data engineers, and data scientists. DataOps processes borrows well-known practices of the Agile methodology and software development. However, building dev/test sandboxes is challenging, more than in DevOps, as test data management is complex (getting representative data, privacy issues).

MLOps/ModelOps

When the analytics part of a data development project is an ML model, different practices and tools are needed to develop, manage, and govern them, in particular

- Experimenting with models: training, testing, validating, retraining and, once they are ready, deploying them.

- Governing models in production, i.e, making sure that predictions remain accurate over time (i.e., identifying and addressing model degradation), and that models adhere to all regulations and risk requirements and controls.

MLOps tools and practices help automate and keep track of the artifacts of the first part of the data science process, while ModelOps practices and capabilities provide the second part. In a separate blog we will delve deeper into practices, techniques and tools dealing with these aspects.

We have argued elsewhere that DataOps and ML/ModelOps practices and tools can and should coexist, as the model experimentation and governance part is specific to advanced analytics projects and the rest is common to both BI projects and ML projects.

AIOps

AIOps, Artificial intelligence for IT operations, is a term coined by Gartner in 2017 referring to the use of AI/ML technology in IT operations to prevent incidents, shrink resolution time and optimize IT resource usage. Rather than applying DevOps to a software domain (as in DataOps/MLOps), it is an evolution of what the industry refers to as ITOA (ITOps Analytics), which monitors IT environment data (machines, networks, logs, events, and metrics data) to gauge baseline performance and threshold parameters through statistical analysis.

The following challenges call for a new generation of services:

- Data volumes: fuelled by cloud and IoT adoption, Gartner estimates that the average enterprise IT infrastructure generates 2-3 times more IT operational data every year.

- Data segregation: ITOA tools work on sectionally segregated data, making it hard to combine these partial views to get a holistic understanding. Each tool generates its own alerts (e.g., infrastructure events and correlated performance issues), resulting in alert fatigue.

- Non-actionable insights: ITOA solutions react to insights raising alerts but lack orchestration with other tools to automate responses.

AIOps uses a big data platform to aggregate different IT operations data; runs DataOps and MLOps workloads to build models that correlate events, detect anomalies, and determine causality; and automates recovery actions through tool orchestration.

Both SRE teams and DevOps teams benefit from AIOps:

- SRE teams are applying root cause analysis and automated recovery actions to reduce response time and preventing incidents through proactive operations.

- DevOps teams are finding that AIOps technology can be used to learn about, and automate, the troubleshooting of applications that they support.

Summary

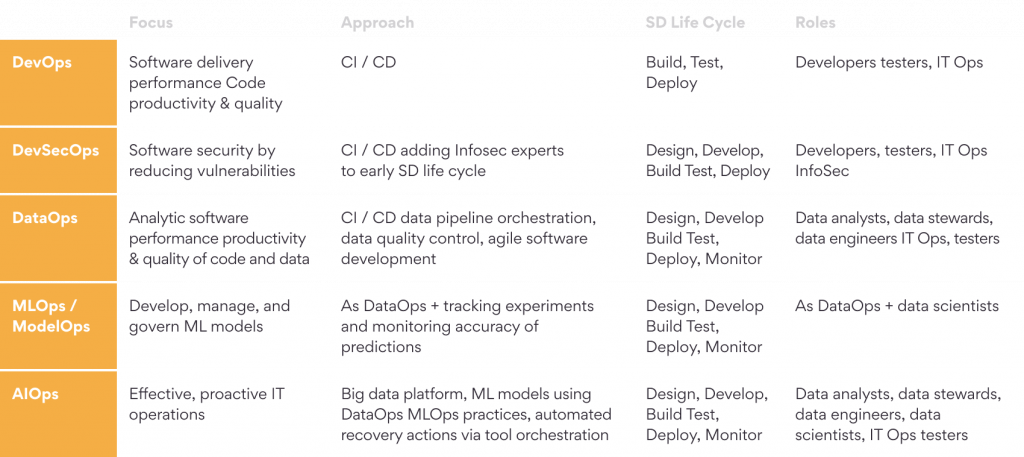

The following table summarizes the practices of the different incarnations of the Ops movement presented in this blog.

The Ops practices have rapidly evolved over the last decade and increasingly data & analytics is at the forefront of this evolution.