In recent years, the rise of generative artificial intelligence (AI) has sparked both excitement and concern. While these technologies hold immense potential for creativity and innovation, they also present challenges that must be addressed to ensure data security and the accuracy and authenticity of their output. Navigating the complexities of generative AI requires a detailed understanding of privacy, transparency, and authenticity.

Privacy Concerns and Intellectual Property Risks

One of the foremost concerns surrounding generative AI is privacy and intellectual property. Because general-purpose public models are trained on vast amounts of data, there’s a risk of proprietary information leaking into the training data, which raises concerns about data privacy and confidentiality. Moreover, models trained on code released under non-permissive licenses may pose risks to intellectual property rights, potentially leading to legal disputes and challenges in protecting proprietary technology.

Ensuring Authenticity

Authenticity is paramount when it comes to GenAI-generated content. However, several factors can undermine it. Factual errors and bias in the output of generative models can distort information and mislead users. Additionally, hallucinations, where models produce fabricated or nonsensical content, can erode trust in the technology. The black-box nature of generative AI models further complicates matters, making it difficult to understand how results are generated and to verify their authenticity. Misleading or subpar solutions in the market can exacerbate these challenges, as some vendors exploit the hype surrounding generative AI to sell inferior products that often fall back on public models.

Lack of Transparency and Governance

Another significant obstacle is the lack of transparency and governance in the development and deployment of generative AI technologies. Many stakeholders, including developers and users, may have limited awareness of the data used to train these models. Without clear guidelines and governance frameworks, there’s a risk of unintended consequences and misuse of generative AI, leading to ethical and regulatory concerns.

SASVA™: Secure and Manageable Enterprise AI

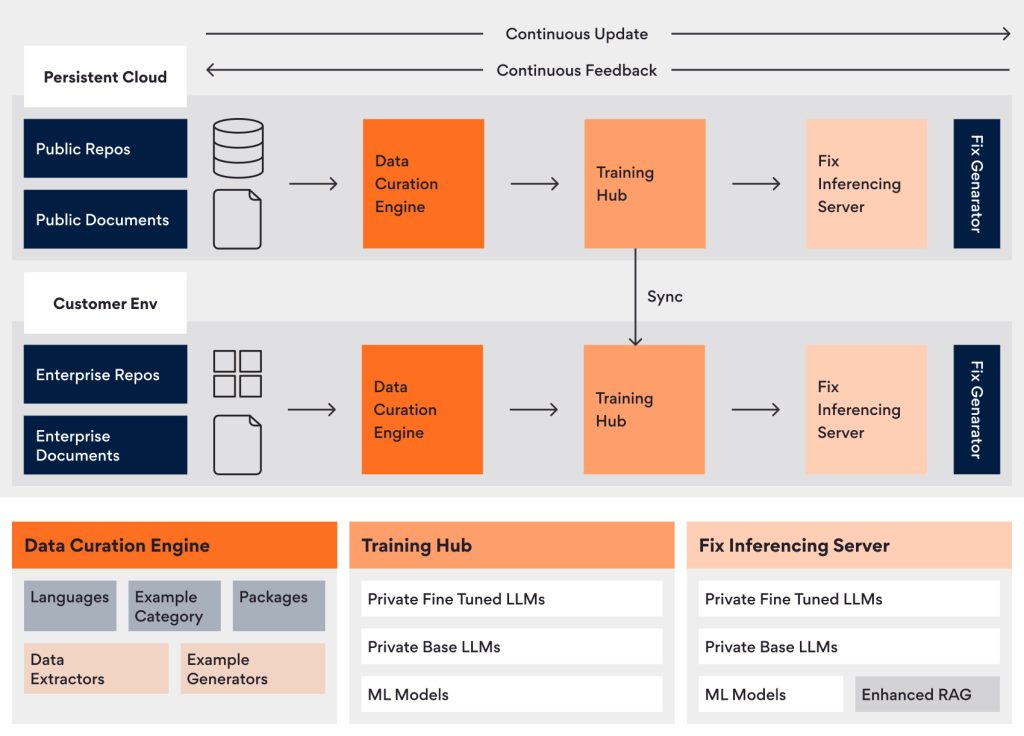

To address these challenges, SASVA™ prioritizes security, authenticity, and customization in enterprise AI deployments. Its on-premises deployment model keeps data under the organization’s control, mitigating the risk of exposing sensitive information. Because organizations train the AI on their own datasets, source code, and documents, its results are more accurate, relevant, and authentic. And because data stays within the organization’s boundaries, deployments can adhere to internal policies and regulatory standards.

SASVA™’s emphasis on small models and specialized data curation techniques delivers accuracy without extensive infrastructure. Its optimized inference server supports both CPU and GPU inference, addressing resource constraints in on-premises environments while maintaining high performance. This approach enables cost-effective AI training and deployment, making SASVA™ practical for organizations to adopt.

Once deployed within an organization’s private environment, SASVA™ integrates with enterprise tools such as ticketing systems, code repositories, and document vaults. Drawing on the data stored in these systems, SASVA™ begins its learning and training processes, analyzing past releases, tech stacks, coding practices, and other relevant data points. This integration enables targeted learning and personalization, so that SASVA™ can deliver solutions and code tailored to the organization’s needs for the next release cycle, based on the theme of that release. By continuously training on customer-specific code bases and documents, SASVA™ keeps its solutions aligned with the organization’s unique challenges and requirements.

Summary: SASVA™ Enables Secure Enterprise AI Practices

In the words of Uncle Ben, “with great power comes great responsibility.” SASVA™ exemplifies this by ensuring data security and delivering authentic, contextual code and content. SASVA™ offers faster, smarter, and more manageable AI without compromising on performance or security, making it a reliable companion for enterprises in their software engineering journey.

Author’s Profile

Anurag Kumar

Product Manager, SASVA™