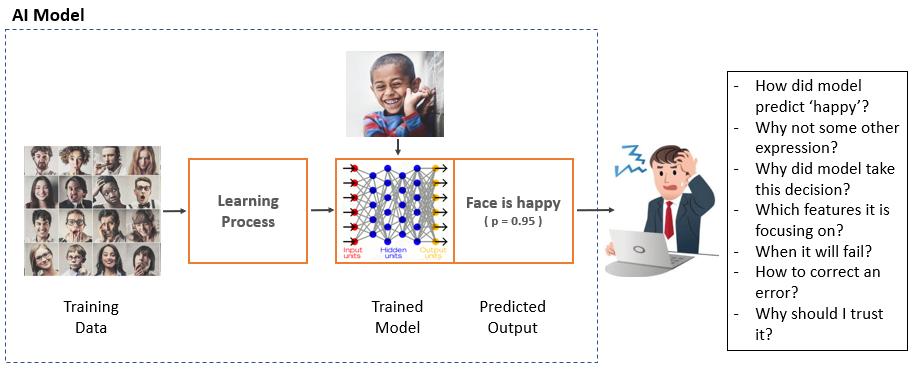

The power of machine learning (ML) is that the models developed can learn intricate patterns in the data and then make predictions on new, “unseen” data. Complex ML models encode these patterns internally and predict the output (Y) for given input (X) values. This works well when integrating models into software systems, but domain experts often ask what reasoning lay behind a model’s output. Why did it make a particular decision? What features were considered, and how were they prioritized to arrive at that decision? These questions become essential when models are responsible for significant decisions such as driving a vehicle on the road or approving or rejecting a loan. We cannot afford to let extraneous factors influence such models and cause an AI system to violate the ethical guidelines of our society.

Today, deep neural networks (DNNs) are widely used in applications such as self-driving vehicles, machine comprehension, weather forecasting, and credit approval. However, these DNNs are largely ‘black box’ models for developers, and they can be tricked easily. Researchers have shown that deep learning (DL) models can learn irrelevant features or be fooled by a small tweak to an image. For example, specially made glasses have been used to trick state-of-the-art DL systems into not recognizing the person wearing them. Hence, predictive performance (often measured by accuracy or F1 score) should not be the only criterion to consider. We need to open the black box, understand the decision-making procedure, and ethically evaluate the inherent assumptions made by ML models. These are precisely the problems Explainable AI (XAI) tries to solve.
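To make the “small tweak” concrete, here is a minimal sketch. It assumes a tiny linear classifier as a stand-in for a deep network; the weights, the four-value “image”, and the function names are all hypothetical. For a linear model the score changes fastest along the sign of each weight, so nudging every input by a small amount in that direction is enough to flip the prediction.

```python
def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def score(weights, bias, pixels):
    """Linear score: positive means class 1, otherwise class 0."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def predict(weights, bias, pixels):
    return 1 if score(weights, bias, pixels) > 0 else 0

def perturb(weights, pixels, epsilon):
    """Shift every input by at most epsilon in the direction that lowers the score."""
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]

# Hypothetical model parameters and input, chosen only for illustration.
weights = [0.5, -0.3, 0.8, -0.6]
bias = 0.0
image = [0.2, 0.1, 0.1, 0.1]

tweaked = perturb(weights, image, epsilon=0.05)
original_label = predict(weights, bias, image)
tweaked_label = predict(weights, bias, tweaked)
max_change = max(abs(a - b) for a, b in zip(image, tweaked))

print("original label:", original_label)
print("label after tweak:", tweaked_label)
print("largest change to any input:", round(max_change, 3))
```

No input value moves by more than 0.05, yet the predicted class changes. Attacks on real image models are more involved, but the example shows why a high score on clean data says little about how a model behaves on slightly altered inputs.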

What is Explainable AI?

XAI is a set of techniques that focus on making ML models understandable to humans. It is also known as Interpretable AI or Transparent AI.

Explainability may not be very important when you are classifying images of cats and dogs, but as ML models are applied to larger and more critical problems, XAI becomes extremely important. When an ML model decides whether to approve a person for a bank loan, it is imperative that the decision is not ‘biased’ by irrelevant factors. Similarly, if an ML model predicts the presence of a disease such as diabetes from a patient’s test results, doctors need substantial evidence of why the prediction was made before suggesting any treatment. In machine learning and deep learning, the trained model is often a ‘black box’: its designers cannot explain why it takes a particular decision or which features it considers while doing so. To get explanations, ‘glass box’ models are required, which increase transparency, accountability, and trustworthiness without sacrificing learning performance. The explanation provided by XAI should be easily understood by humans. In other words, XAI extracts knowledge from ‘black box’ models and represents it in a human-understandable format.
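One simple way to ask a black box which features it relies on is permutation importance: shuffle one feature column at a time and measure how much the model’s accuracy drops. The sketch below is illustrative only. The loan “model”, its threshold, the feature names, and the synthetic data are all hypothetical, and the labels are taken from the model’s own outputs so that the baseline accuracy is exactly 1.0.

```python
import random

def black_box_model(row):
    """Hypothetical opaque loan model: 1 = approve, 0 = reject.
    It uses income and debt and ignores the zip-code digit."""
    income, debt, zip_digit = row
    return 1 if income - 2 * debt > 20 else 0

def accuracy(model, rows, labels):
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop observed when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled_rows = [row[:j] + (value,) + row[j + 1:]
                         for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled_rows, labels))
    return importances

# Synthetic applicants: (income, debt, zip_digit). Values are made up.
data_rng = random.Random(42)
rows = [(data_rng.uniform(10, 100), data_rng.uniform(0, 40), data_rng.randint(0, 9))
        for _ in range(500)]
labels = [black_box_model(row) for row in rows]

feature_names = ["income", "debt", "zip_digit"]
importances = permutation_importance(black_box_model, rows, labels, len(feature_names))
for name, importance in zip(feature_names, importances):
    print(f"{name}: accuracy drop = {importance:.3f}")
```

Shuffling `zip_digit` leaves accuracy unchanged, which indicates that the model does not depend on it, while shuffling `income` or `debt` hurts accuracy. Had the irrelevant feature shown a large drop, that would be a signal to investigate the model for unwanted bias.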

Currently, AI models are evaluated using metrics such as accuracy or F1 score on validation data. Real-world data may come from a slightly different distribution than the training data, so a good score on validation data does not by itself justify trusting the model. Hence, an explanation delivered along with a prediction can help turn an untrustworthy model into a trustworthy one.
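As a small illustration of how a single metric can mislead, the sketch below computes accuracy and F1 for two hypothetical sets of predictions on an imbalanced data set (5 positives, 95 negatives). The labels and predictions are made up for the example, and F1 is defined as 0 when there are no true positives, false positives, or false negatives to count.

```python
def accuracy(y_true, y_pred):
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)

def f1_score(y_true, y_pred):
    """F1 = 2*TP / (2*TP + FP + FN); returns 0.0 if the denominator is zero."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0

# Hypothetical validation labels: 5 positive cases followed by 95 negative ones.
y_true = [1] * 5 + [0] * 95

# Model A predicts "negative" for everyone.
always_negative = [0] * 100

# Model B finds 4 of the 5 positives but also raises 5 false alarms.
finds_positives = [1, 1, 1, 1, 0] + [1] * 5 + [0] * 90

for name, y_pred in [("A", always_negative), ("B", finds_positives)]:
    print(f"model {name}: accuracy={accuracy(y_true, y_pred):.2f}, "
          f"F1={f1_score(y_true, y_pred):.2f}")
```

Model A reaches higher accuracy than model B even though it never detects a positive case, and only the F1 score exposes this. Neither number, however, says why a model decided as it did, which is the gap an explanation is meant to fill.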

Why do we need XAI?

The performance of deep neural networks has reached or even exceeded the human level on many complex tasks. In spite of this success, their usefulness is limited by their inability to explain their decisions in a human-understandable format. It is challenging to get insights into their internal workings; they are black boxes for humans. As we move forward with building more intelligent and robust AI systems, we should get to the bottom of these black boxes. In many fields, such as healthcare, defense, finance, security, and transportation, we cannot blindly trust AI models.

The goals of explainable AI systems are:

- Generate models that are both high-performing and more explainable

- Present the generated explanations in a human-understandable format that can be trusted

There are three crucial building blocks for developing an explainable AI system:

- Explainable models

The first challenge in building an explainable AI system is to create a set of new or modified machine learning algorithms that produce explainable models. Explainable models should be able to generate an explanation without hampering performance.

- Explanation interface

The explanation generated by the explainable model should be shown to humans in human-understandable formats. There are many state-of-the-art human-computer interaction techniques available to generate compelling explanations. Data visualization models, natural language understanding and generation, conversational systems, etc. can be used for the interface.

- Psychological model of explanation

Humans make most decisions unconsciously and cannot always explain them. Hence, psychological theories of explanation can help developers as well as evaluators, and more powerful explanations can be generated by taking psychological requirements into account. For example, a user can rate the clarity of a generated explanation, which helps measure user satisfaction, and the model can be continuously retrained based on these ratings.

Explainability can be a mediator between AI and society. It is also a useful tool for identifying issues in ML models, artifacts in the training data, and biases in the model, as well as for improving models, verifying results, and, most importantly, getting an explanation. Even though explainable AI is complex, it is likely to be a major focus of research in the future.

Explainable AI is a major research area at Persistent Systems. Our AI Research lab is helping banking, healthcare, and industrial customers extract explanations from complex ML models. These explanations, once validated by domain experts, can give greater confidence in using ML models for critical tasks. Using state-of-the-art AI explainability methods from industry and academia, we are trying to bridge the gap between domain knowledge and ML models to build systems that are ethical and free from unnecessary bias.

For any questions or more details, please contact us: airesearch@persistent.com

